Links and Notes - January 21st 2025

Well, where is VR then?

On one hand, you have ESPN using VR to break down American football plays.

On the other hand, you have this dev who is super jittery about the future of VR despite actively developing a yet-to-be-released game.

Alright VR fam. We need to talk.

— Blair Renaud // LOW-FI 🟥🟧⬛ (@Anticleric) January 19, 2025

Quest app sales are down 27% year over year. The FReality podcast just shut down. The visionaries of VR have all jumped ship, from @PalmerLuckey to @ID_AA_Carmack. What we're left with is @finkd and Meta, subsidizing the entire industry, throwing…

So while it looks like mixed signals all around, the VR community mostly seems hopeful, with some good discourse and ideas about the long haul.

This one especially is a strong thread advocating for the future of VR and pointing out that the medium is still in its early days, with folks figuring out what the basics should look and feel like. For me, it helps that he works with Stress Level Zero, one of the earliest studios making these games. They really saw the potential of the device even back when headsets were clunky machines tethered to a desktop, with tracking devices set up around the room.

Here's one of the tech demos, with Brandon (RocketJump, I miss youuu) showing it to the folks at Node and Corridor. There are some crazy details in there, including the fact that they had to write code to guess where your arms were based on a chain of calculations starting from your neck. If I'm not mistaken, that's now all handled at the SDK layer. If anyone knows about building stuff for the long haul and iterating on what the future of VR could look like, it's them.
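That arm-guessing trick is most likely a form of inverse kinematics. Here's a minimal two-bone IK sketch in Python to show the idea, assuming the neck and hand positions are known, the shoulder sits at a fixed (hypothetical) offset from the neck, the arm lengths are given, and the coordinate system is y-up with -z forward. It is not Stress Level Zero's code or any SDK's API; `estimate_elbow`, `shoulder_offset`, and `pole_hint` are made-up names for illustration.

```python
import math

Vec = tuple[float, float, float]


def add(a: Vec, b: Vec) -> Vec:
    return (a[0] + b[0], a[1] + b[1], a[2] + b[2])


def sub(a: Vec, b: Vec) -> Vec:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def scale(a: Vec, s: float) -> Vec:
    return (a[0] * s, a[1] * s, a[2] * s)


def dot(a: Vec, b: Vec) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def norm(a: Vec) -> float:
    return math.sqrt(dot(a, a))


def estimate_elbow(neck: Vec, hand: Vec, shoulder_offset: Vec,
                   upper_arm: float, forearm: float,
                   pole_hint: Vec = (0.0, -1.0, 0.5)) -> Vec:
    """Guess an elbow position from a neck position and a tracked hand (two-bone IK)."""
    # Assumption (hypothetical): the shoulder is a fixed offset from the neck.
    shoulder = add(neck, shoulder_offset)
    to_hand = sub(hand, shoulder)
    dist = norm(to_hand)
    if dist < 1e-9:
        raise ValueError("hand is at the shoulder; elbow direction is undefined")
    direction = scale(to_hand, 1.0 / dist)
    # Clamp the reach so a shoulder-elbow-hand triangle always exists.
    d = min(max(dist, abs(upper_arm - forearm) + 1e-6), upper_arm + forearm - 1e-6)
    # Law of cosines: how far along the shoulder->hand line the elbow projects.
    x = (upper_arm ** 2 - forearm ** 2 + d ** 2) / (2.0 * d)
    # How far the elbow sits off that line.
    h = math.sqrt(max(upper_arm ** 2 - x ** 2, 0.0))
    # Bend direction: the pole hint (roughly down and back, assuming y-up and
    # -z forward) with its component along the arm removed.
    pole = sub(pole_hint, scale(direction, dot(pole_hint, direction)))
    pole_len = norm(pole)
    if pole_len < 1e-9:
        raise ValueError("pole_hint is parallel to the arm; pick another hint")
    pole = scale(pole, 1.0 / pole_len)
    return add(add(shoulder, scale(direction, x)), scale(pole, h))


if __name__ == "__main__":
    elbow = estimate_elbow(neck=(0.0, 1.5, 0.0), hand=(0.5, 1.2, -0.3),
                           shoulder_offset=(0.18, -0.05, 0.0),
                           upper_arm=0.30, forearm=0.28)
    print(elbow)
```

The `pole_hint` is there because the same shoulder and hand positions allow a whole circle of valid elbow positions; the hint just picks the one that looks like a natural bend.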

Have hope!

The joys of links and notes

My favourite thing about these types of posts is how they change my relationship with what I consume. There was this post on Threads about how we end up distracted because we consume too much.

For me, having links and notes flips my relationship with the material I consume into something I'm really thoughtful about. When I read, watch, or listen to something interesting, I find myself thinking hard about what I want to write about it and how to supplement it with other information.

As an example, yesterday, when I was writing about my frustration with how information gets copied and reformatted without attribution, I actually stopped to think about the YouTube video itself. Was it also copied from another source? As it turned out, it was. How much of a copy? A pretty massive one. If I had just been scanning through, I would have thought to myself "gosh, this X thread is yet another example of information being ripped from other sources" and I wouldn't have thought twice about the YouTube link.

Even in the section that precedes this one, I've linked to so many other threads. I'm forming a web of information purely because I'm transforming it into a written piece of links and notes. It's not just fleeting thoughts.

I use the words "fleeting thoughts" intentionally here. In the past, I've tried to build a similar relationship with the things I consume by using the Zettelkasten method. And while it does slow things down, I find that it doesn't match my motivations. I do want to write about stuff, but I don't want to turn it into some network of thoughts by default. I don't want to form atomic thoughts or what have you. I just want to distill my thoughts. For me, links and notes work better.

Lastly, it's really just fun to see these as a kind of time capsule that also shows how my understanding of, or reaction to, a still-evolving topic progresses.

OpenAI hype, chill

Speaking of topics that are evolving, I wrote about my concerns about AGI/ASI yesterday. Today, Sam Altman hops on Twitter to play down the hype with a categorical statement about their progress on AGI.

The hype squad had interesting reactions, and my favourite type was from folks who felt they had been rug pulled. I will say that I agree with them to some degree. Folks are linking to this tweet, but in all fairness, that ignores the second post in the thread, which in my opinion does not hint at AGI at all. Then again, Sam did say that they now know how to build AGI as they understand it:

We are now confident we know how to build AGI as we have traditionally understood it.

and

We are beginning to turn our aim beyond that, to superintelligence in the true sense of the word.

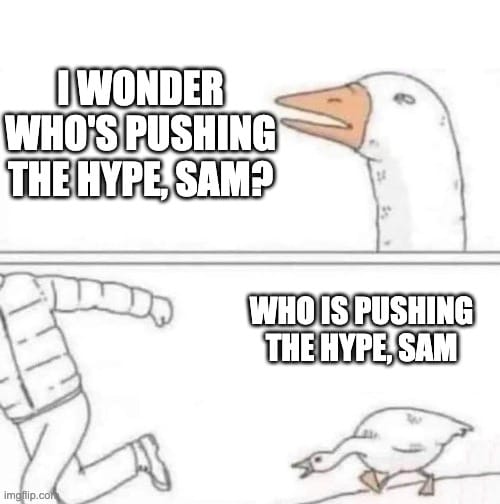

Therefore, I feel that this reaction, my favourite, is entirely justified.

What the heck is DeepSeek?

Sometimes I realize just how little I'm following the discourse on a particular topic. DeepSeek is apparently a language model that is open source? I didn't even know it existed until they launched their reasoning model yesterday, which is apparently competitive with OpenAI's o1 reasoning model.

There's stuff in their PDF about the model finding an "aha moment" through reinforcement learning and so on. I can't pretend that I understand any of it. What I do understand is this comment from Hacker News that shares how the model reasoned about a joke. The full reasoning about the joke is in this gist. I agree with the poster: the joke is rubbish. But reading how it "thinks" through the idea is very cool. Of course, we also see the edge of this model's capacity, and really of basically any model's, which is that it doesn't understand what is actually funny. Even so, I wonder what would be possible if you fed in a custom prompt that asks it to lay out the premise, the setup, and the punchline/payoff, along with details about what makes a punchline laugh-worthy. Maybe... just maybe... it might come up with something that's actually funny.
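As a rough idea of what that custom prompt could look like, here's a minimal Python sketch that assembles one. The wording and the `build_joke_prompt` name are my own hypothetical illustration, not anything from DeepSeek's paper or the Hacker News thread, and there's no guarantee it actually produces funnier jokes.

```python
def build_joke_prompt(topic: str) -> str:
    """Assemble a structured joke prompt (hypothetical template, untested on any model)."""
    sections = [
        f"Write one short joke about {topic}.",
        "Before giving the final joke, work through these parts in order:",
        "1. Premise: the everyday truth or observation the joke rests on.",
        "2. Setup: the lines that lead the listener toward one expectation.",
        "3. Punchline/payoff: the twist that breaks that expectation.",
        "4. Check: explain why the punchline would make someone laugh "
        "(surprise, recognition, absurdity). If it would not, rewrite it.",
    ]
    # One instruction per line keeps each step easy for the model to follow.
    return "\n".join(sections)


if __name__ == "__main__":
    print(build_joke_prompt("coffee"))
```

The "Check" step is the part that targets the weakness above: it asks the model to reason explicitly about why something is funny instead of just producing a joke-shaped sentence.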

When I started writing this, all I could think was: how close are we to the emergence of some kind of intelligence anyway? Maybe it's a few nudges away? Then I read this gist where the model desperately tries to count how many 'r's there are in the word strawberry. After all its mulling and genuinely interesting thinking, it just crumbles over itself because its token prediction won't let it say that there are three 'r's in strawberry. Literally. It counts the occurrences one by one, and when it gets to the third it says:

R (Third occurrence) – Wait, this can't be right because the word only has two Rs.

I feel like this is the clearest peek into the "mind" of the machine and it's funny as heck.
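To see why this trips the model up, compare counting characters directly with what a language model actually receives: tokens (chunks of text mapped to integer IDs), not letters. The token split and IDs below are made up for illustration; real tokenizers, including DeepSeek's, may split "strawberry" differently.

```python
def count_letter(word: str, letter: str) -> int:
    """Count a letter one character at a time, the way the model tried to."""
    count = 0
    for ch in word.lower():
        if ch == letter.lower():
            count += 1
    return count


# Hypothetical split for illustration only; real tokenizers may differ.
HYPOTHETICAL_TOKENS = ["str", "aw", "berry"]
# Made-up IDs: a model receives integers like these, never the letters inside them.
HYPOTHETICAL_IDS = {"str": 1, "aw": 2, "berry": 3}

if __name__ == "__main__":
    word = "strawberry"
    print(count_letter(word, "r"))  # → 3
    print([HYPOTHETICAL_IDS[t] for t in HYPOTHETICAL_TOKENS])  # → [1, 2, 3]
    # Per-token counts: the letters are spread across chunks the model never sees inside.
    print({t: count_letter(t, "r") for t in HYPOTHETICAL_TOKENS})
```

A program that walks the characters gets 3 every time. The model only ever sees something like `[1, 2, 3]`, so "how many r's" has to be recalled from training data rather than counted, which is roughly where its confident "only has two Rs" comes from.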

This blog doesn't have a comment box. But I'd love to hear any thoughts y'all might have. Send them to [email protected]

Previous links and notes (20th January 2025)

Posted on January 21 2025 by Adnan Issadeen